The use of AI systems in the employee selection process is becoming increasingly widespread.

As of early 2026, approximately 87 percent of companies use AI in one form or another to streamline recruitment processes.

This trend is driven, in particular, by financial and time efficiency: AI can speed up hiring by 30–75%, depending on the workflow.

However, these are far from the only advantages. Statistics show that 70% of recruiters say AI boosts efficiency by reducing manual screening time, that AI helps reduce cost per hire by up to 35%, improving hiring ROI, and that recruiters using AI-assisted outreach are 9% more likely to make quality hires.

Alongside the benefits for employers, the use of AI in the hiring process may also have negative consequences. In particular, the use of AI in employment, especially in recruitment, constitutes decision-making that affects the terms of work-related relationships and has a significant impact on individuals’ future career prospects, livelihoods, and workers’ rights.

As noted in the EU AI Act, throughout the recruitment process and in the evaluation, promotion, or retention of persons in work-related contractual relationships, such systems may perpetuate historical patterns of discrimination, for example against women, certain age groups, persons with disabilities, or persons of certain racial or ethnic origins or sexual orientation. AI systems used to monitor the performance and behaviour of such persons may also undermine their fundamental rights to data protection and privacy. Public sentiment reflects these concerns: 37% of HR leaders cite data privacy as their biggest concern regarding the use of AI, and only 26% of job applicants trust AI systems to evaluate them fairly.

Therefore, with the adoption of the AI Act in 2024, the European legislator classified AI systems intended to be used for the recruitment or selection of natural persons as high-risk, which makes them subject to stricter legal requirements and to penalties for non-compliance.

However, we acknowledge the need for companies to allocate their resources efficiently in the hiring process, as well as the legitimate intention of employers to use AI tools. This article has been developed specifically for AI deployers who intend to use such tools in their recruitment processes, with the aim of supporting responsible and compliant deployment.

Common AI use cases in recruitment

AI systems are increasingly used across the recruitment lifecycle, from candidate sourcing and initial screening to assessment, interviewing, and post-offer coordination.

While many AI recruitment platforms operate primarily as keyword-based matchers, experience in 2025 shows that certain tools, such as Fonzi AI, Carv, HireVox.ai, Recruitment Intelligence, and JusRecruit, can genuinely improve pipeline quality and reduce screening time by learning from recruiter feedback, understanding technical requirements, and handling niche or specialized roles.

Examples of AI use in recruitment can be loosely divided according to two criteria: the stage of the recruitment process and the functional role performed by the AI system.

- By stage of recruitment, AI tools may be applied at different points in the hiring lifecycle, including candidate sourcing, initial screening, assessment, interviewing, selection, and post-offer coordination. The level of impact on hiring outcomes varies depending on how early and decisively the system is used in the process.

- By functional role, AI systems may serve distinct purposes, such as automating administrative tasks, supporting decision-making through recommendations or scoring, or directly influencing hiring decisions by ranking, filtering, or predicting candidate suitability.

“High-risk AI system” under the AI Act

The EU AI Act adopts a risk-based approach, assigning compliance obligations in proportion to the potential risks that AI systems pose to health, safety, and fundamental rights. The risk categories are: unacceptable risk (prohibited AI systems), high risk, limited risk, and minimal or no risk (unregulated, including the majority of AI applications currently available on the EU single market).

As mentioned above, the Act specifically provides that AI systems used in employment, workers management and access to self-employment, in particular for the recruitment and selection of persons and for making decisions affecting the terms of the work-related relationship, should be classified as high-risk, since those systems may have an appreciable impact on the future career prospects and livelihoods of those persons and on workers’ rights.

Article 6(2) of the Act states that AI systems referred to in Annex III shall be considered high-risk, and point 4(a) of Annex III specifies that these include AI systems intended to be used for the recruitment or selection of natural persons, in particular to place targeted job advertisements, to analyse and filter job applications, and to evaluate candidates.

- Placing targeted job advertisements: AI systems identify and display job ads to candidates most likely to match the role.

- Analyzing and filtering job applications: AI systems review applications and resumes to shortlist candidates based on predefined criteria.

- Evaluating candidates: AI systems assess candidates’ skills, qualifications, or suitability for a position through scoring, ranking, or predictive models.

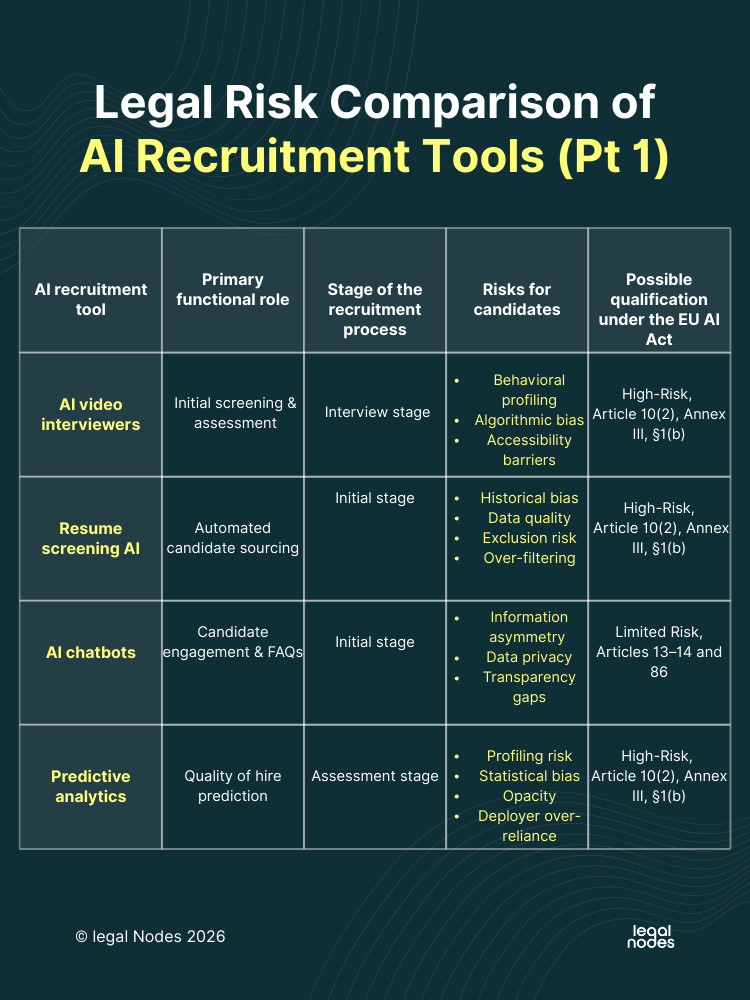

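The following figure summarises the risks associated with particular AI recruitment tools and their possible qualification under the Act.

[Figure: risks associated with particular AI recruitment tools and their possible qualification under the Act]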

Therefore, the vast majority of AI recruitment tools fall under the high-risk category of the EU AI Act, while some are considered limited risk, which also gives rise to certain obligations regarding their use under the provisions of Article 86 (clear and meaningful explanations of the role of the AI system in the decision-making procedure and the main elements of the decision taken).

EU AI Act applicability to recruiters and HRs

The term “recruiting” derives from the French “recruter” – to recruit, to hire.

A recruiter may be a company or organization that is looking for new employees, a specialized organization that finds people to work for other companies, or a person who works for such an entity and is involved in finding and selecting personnel.

In some companies an HR manager may also perform the duties of a recruiter, but the main focus of their work is onboarding, training and motivating employees. Human resources, or HR, manages the employee life cycle, including recruiting, hiring, onboarding, training, performance management, administering benefits, compensation and firing.

Both of these categories overlap closely in the context of using AI recruitment tools and can be described as natural or legal persons using AI systems in employment relations. As stated in Recital 57 and Annex III of the Act, AI systems used in employment, workers management and access to self-employment include in particular those used in the recruitment and selection of persons, to analyse and filter job applications, and to evaluate candidates. Under Article 3(4) of the AI Act, natural or legal persons who use an AI system under their authority, except where the AI system is used in the course of a personal non-professional activity, are called “deployers”.

Chapter I of the EU AI Act establishes the conditions under which the use of an AI product falls within the scope of the Act. According to Article 2(1)(b) and (c), the Regulation applies to:

- deployers of AI systems that have their place of establishment or are located within the Union;

- providers and deployers of AI systems that have their place of establishment or are located in a third country, where the output produced by the AI system is used in the Union.

Please note that if the deployer (recruiter or HR) is established or located in the EU, or the output (e.g. candidate analysis, screening result) is used within the EU or EEA territory, the AI Act applies to their activities.
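The territorial test described above can be sketched as a small decision function. This is only an illustration of the two conditions quoted from Article 2(1)(b) and (c); the names `DeploymentContext` and `ai_act_applies_to_deployer` are hypothetical, and the sketch deliberately ignores the exclusions listed elsewhere in Article 2, so it is not a substitute for a legal assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentContext:
    """Hypothetical description of one AI recruitment deployment."""
    deployer_in_union: bool     # deployer is established or located within the Union
    output_used_in_union: bool  # e.g. screening results are used for hiring in the Union


def ai_act_applies_to_deployer(ctx: DeploymentContext) -> bool:
    """Return True if either territorial condition from the text is met."""
    # Article 2(1)(b): deployer established or located within the Union.
    if ctx.deployer_in_union:
        return True
    # Article 2(1)(c): third-country deployer whose system output is used in the Union.
    return ctx.output_used_in_union


if __name__ == "__main__":
    # A recruiter outside the EU screening candidates for a role in the EU.
    third_country_recruiter = DeploymentContext(
        deployer_in_union=False, output_used_in_union=True
    )
    print(ai_act_applies_to_deployer(third_country_recruiter))
```

The point of the example is that the location of the deployer alone does not settle the question: a deployer outside the Union is still in scope once the output is used in the Union.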

Requirements and fines under the AI Act

The legal obligations imposed by the EU AI Act on deployers of AI recruitment tools stem from the high-risk classification of the AI system and its specific application. Accordingly, these obligations constitute the general duties of deployers of high-risk AI systems.

If an AI recruitment system is classified as high-risk under the EU AI Act, deployers are required to implement technical and organizational measures to ensure the system is used in accordance with its instructions for use. Section 3 of Chapter III of the AI Act outlines the obligations of providers and deployers of high-risk AI systems. Deployers must assign responsibility to competent individuals, monitor the AI’s performance, and maintain high-quality, relevant input data to prevent bias or errors. Deployers are also obliged to inform stakeholders and affected individuals about the use of the AI system, suspend its operation if risks arise, and report serious incidents to providers and relevant authorities.

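The penalties under the AI Act exceed even those provided for in the GDPR. The maximum fine, which applies to prohibited AI practices, is EUR 35,000,000 or 7% of total worldwide annual turnover, whichever is higher. Non-compliance with the obligations of deployers of high-risk AI systems carries a fine of up to EUR 15,000,000 or 3% of total worldwide annual turnover.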
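To make the ceiling concrete, the arithmetic can be sketched as follows. This is a rough illustration that assumes the "whichever is higher" rule the Act applies to undertakings other than SMEs; the function name `fine_ceiling_eur` and its parameters are hypothetical, the defaults are the EUR 35,000,000 and 7% figures above, and the result is only the upper limit, not the fine an authority would actually impose.

```python
def fine_ceiling_eur(
    annual_worldwide_turnover_eur: int,
    fixed_cap_eur: int = 35_000_000,
    turnover_percent: int = 7,
) -> int:
    """Upper limit of a fine: the higher of a fixed cap and a share of turnover.

    Assumption: the 'whichever is higher' rule applies (non-SME undertaking).
    Integer arithmetic is used so whole-euro amounts stay exact.
    """
    if annual_worldwide_turnover_eur < 0:
        raise ValueError("turnover must not be negative")
    turnover_based = annual_worldwide_turnover_eur * turnover_percent // 100
    return max(fixed_cap_eur, turnover_based)


if __name__ == "__main__":
    # Turnover of EUR 1 billion: 7% is EUR 70 million, above the fixed cap.
    print(fine_ceiling_eur(1_000_000_000))
    # Turnover of EUR 100 million: 7% is EUR 7 million, so the fixed cap governs.
    print(fine_ceiling_eur(100_000_000))
```

For large companies the turnover-based limb dominates, which is why the exposure grows with the size of the business rather than stopping at the fixed amount. Other penalty tiers can be explored by passing a different cap and percentage.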

Moreover, the AI Act emphasizes that Member States should take all necessary measures to ensure that the provisions of the Regulation are implemented, including by laying down effective, proportionate and dissuasive penalties for their infringement. Member States will lay down the upper limits for administrative fines for certain specific infringements. National labour law may impose additional restrictions. For example, Section 30(2) of the Labour Code of the Czech Republic limits the data processed prior to the commencement of employment exclusively to information directly related to the conclusion of the employment contract, thereby excluding common practices of AI tools such as social media scraping, psychological profiling, and collecting data on family circumstances. In addition, according to Section 316(4) of the Labour Code, employers may not request or obtain the above-mentioned information through third parties; if they do so, the employer, as deployer, will be liable under the AI Act, data protection law, and local labour legislation.

Deployers of AI recruitment tools should ensure strict compliance with both the EU AI Act and national labor laws by limiting data collection to what is strictly necessary, implementing robust oversight and transparency measures, and documenting all processes to mitigate legal and financial risks.

EU AI compliance documentation and processes for responsible deployment

We recommend identifying and classifying all AI use cases at an early stage, determining the organisation’s role (provider or deployer), the system’s risk category, and whether specific transparency obligations or exemptions apply under the AI Act. This assessment should be properly documented to ensure that the deployer can demonstrate compliance if questioned by regulatory authorities or subject to a compliance review.

If the system falls under the high-risk category:

- if you deploy a high-risk AI system listed in Annex III that makes or assists in making decisions concerning individuals, inform those individuals that they are subject to the use of such a system, and clearly communicate to candidates how AI is used in the recruitment and selection process.

- assign human oversight to natural persons who have the necessary competence, training and authority. You should ensure that decisions are reviewed by a human being.

- to the extent you exercise control over the input data, ensure that such data is relevant and sufficiently representative in light of the intended purpose of the high-risk AI system, and document the assessment results.

- monitor the operation of the high-risk AI system in accordance with the instructions for use and, where applicable, inform the provider in line with Article 72 of the EU AI Act.

- ensure that automatically generated logs of the high-risk AI system under your control are retained for at least six months, or longer where required by applicable EU or national law, particularly data protection legislation, consistent with the system’s intended purpose.

- assess whether a Data Protection Impact Assessment under Article 35 GDPR is required and, where applicable, rely on the information provided pursuant to Article 13 of the EU AI Act to support and document compliance.
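The log-retention item in the list above lends itself to a simple automated check. The sketch below assumes that "six months" is counted in calendar months from the date a log was created, which is an interpretation rather than something the text specifies; the helper names `add_months`, `earliest_deletion_date` and `may_delete` are hypothetical, and a longer period should be passed in where EU or national law requires it.

```python
import calendar
from datetime import date

# Minimum retention period stated in the text; applicable law may require longer.
MIN_RETENTION_MONTHS = 6


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of a shorter month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def earliest_deletion_date(created: date, months: int = MIN_RETENTION_MONTHS) -> date:
    """First day on which a log created on `created` may be deleted."""
    if months < MIN_RETENTION_MONTHS:
        raise ValueError("retention period must be at least six months")
    return add_months(created, months)


def may_delete(created: date, today: date, months: int = MIN_RETENTION_MONTHS) -> bool:
    """True once the retention period for the log has fully elapsed."""
    return today >= earliest_deletion_date(created, months)


if __name__ == "__main__":
    log_created = date(2026, 1, 15)
    print(earliest_deletion_date(log_created))
    print(may_delete(log_created, today=date(2026, 7, 14)))
```

Rejecting any period shorter than six months in `earliest_deletion_date` is a deliberate choice: it keeps a misconfigured retention policy from silently deleting logs too early.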

At the contractual stage, deployers should ensure that providers commit to sufficient transparency, cooperation, and ongoing assistance to enable compliance with the AI Act. This should include clear allocation of responsibilities, access to necessary technical documentation, support with conformity assessments and audits, timely updates regarding material changes, and appropriate indemnities or risk-sharing mechanisms. Without these safeguards, deployers may find themselves carrying regulatory exposure without adequate visibility or control over the underlying system.